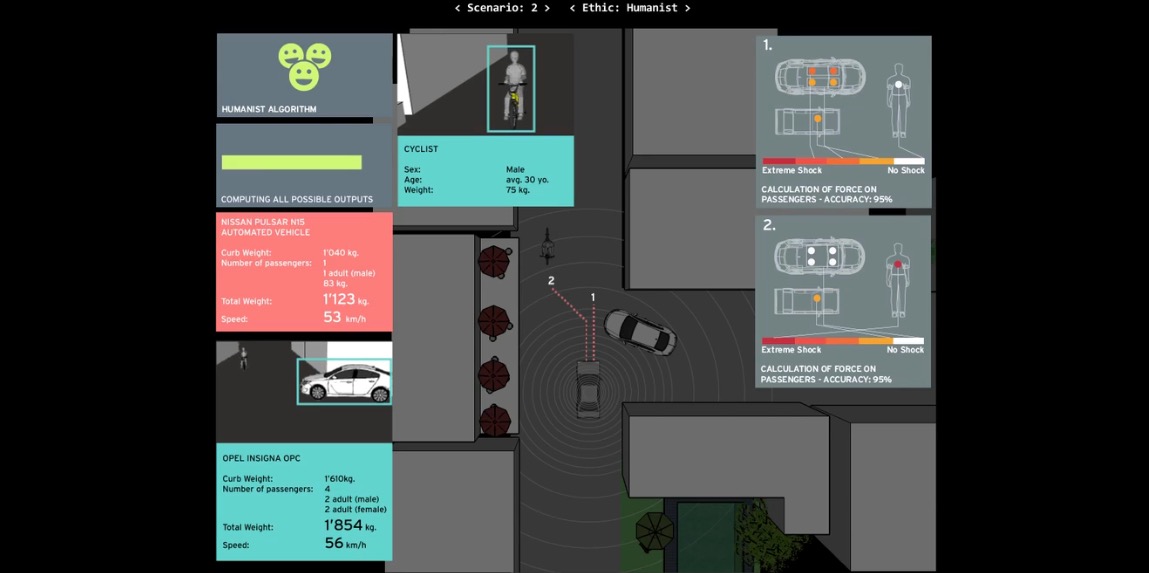

We tend to assume that humans can respond wisely to dynamically changing conditions: that a driver caught in the infamous trolley dilemma could choose whether to kill one person to save five others. But how would a machine make such a moral decision? Driverless vehicles might prioritize protecting human life, limiting physical damage, or reducing insurance costs. What if the ethical code of our self-driving vehicles became just another commodified feature to purchase and change at will?

Article: Wishful Thinking

Amateur Cities

Ethical Autonomous Vehicles